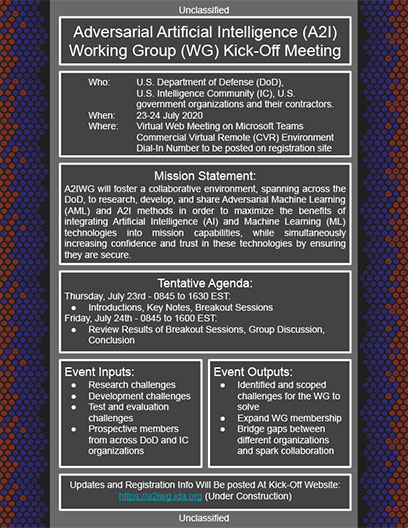

| Dates: | July 23–24, 2020 |

| Location: | Virtual Workshop (DoD CVR Teams meeting information emailed to registrants who are Government or Government Contractors) |

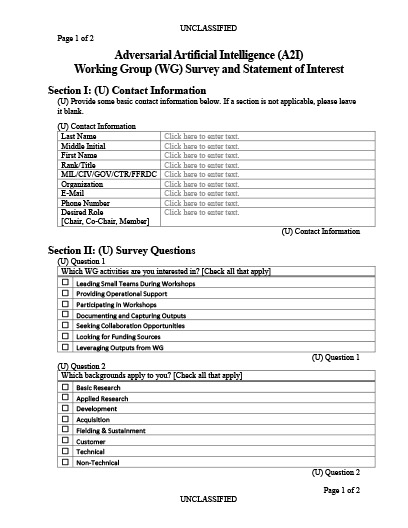

| Registration: | Registration is Now Closed |

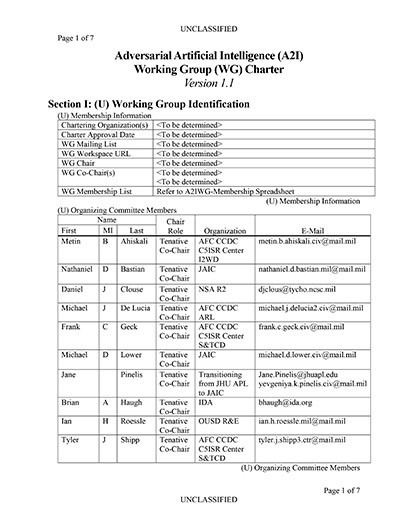

| Steering Lead: | Dr. Jill Crisman, Technical Director for Artificial Intelligence, OUSD (R&E) |

| A2IWG Chair: | (TBD) |

Main Kick-Off Agenda

The main kick-off event will be held virtually on Microsoft Teams in the Commercial Virtual Remote (CVR) environment over two days, July 23–24. Discussions will be organized into three topics and held in sequence. A tentative schedule is provided below.

Schedule Update: One of the breakout sessions was dropped. The remaining three sessions are now being held sequentially.

Day 1 — Thursday, July 23 Schedule

| Time | Activity |
|---|---|
| 0845 | Arrival / Sign-in |
| 0900 | Welcome / Introductory Remarks |
| 0915 | Keynote by Dr. Jill Crisman, Technical Director for Artificial Intelligence, OUSD(R&E) |
| 1000 | IDA Briefing on A2I/AML |
| 1030 | Break |
| 1045 | DoD/IC A2I Activity Introductions |
| 1130 | Introduce Breakout Session Topics |
| 1200 | Lunch |
| 1300 | Initial Topic 1 (AI Trusted Robustness) Group Discussion |
| 1400 | Break |
| 1410 | Initial Topic 2 (AI System Security) Group Discussion |
| 1510 | Break |
| 1520 | Initial Topic 3 (A2I Foundational Gaps) Group Discussion |
| 1620 | Concluding Remarks |
| 1630 | Conclude |

Day 2 — Friday, July 24 Schedule

| Time | Activity |
|---|---|
| 0845 | Arrival / Sign-in |
| 0900 | Welcome / Introductory Remarks |
| 0915 | Keynote by Dr. Daniel Ragsdale, Assistant Director for Cyber, OUSD(R&E) |
| 1000 | Recap Day 1 Topic 1 (AI Trusted Robustness) Group Discussion |
| 1030 | Continue Topic 1 (AI Trusted Robustness) Group Discussion |
| 1130 | Lunch |
| 1230 | Recap Day 1 Topic 2 (AI System Security) Group Discussion |
| 1300 | Continue Topic 2 (AI System Security) Group Discussion |
| 1400 | Break |
| 1410 | Recap Day 1 Topic 3 (A2I Foundational Gaps) Group Discussion |
| 1440 | Continue Topic 3 (A2I Foundational Gaps) Group Discussion |
| 1540 | Concluding Remarks |
| 1600 | Conclude |

Disclaimer for Participation in Discussions

The event is held at the Unclassified level; material, content, and discussions are restricted to Distribution C (distribution authorized to U.S. Government agencies and their contractors; Critical Technology; 16 July 2020). Classified discussions will be held at future events.

Microsoft Teams imposes additional restrictions that prohibit discussing or presenting Controlled Technical Information (CTI), Personally Identifiable Information (PII), or anything above the Controlled Unclassified Information (CUI) level.

Information that cannot be discussed during the event:

- Classified information

- Personally Identifiable Information (PII)

- Controlled Technical Information (CTI)

- Department of Defense (DoD) only information

- Government only information

Examples of information that can be discussed during the event:

- High-level conceptual information

- Controlled Unclassified Information (CUI) that is not CTI, PII, or government-only information

- Public information from industry, academia, research institutions, etc.

- Information that has been approved for public release

- Otherwise uncontrolled and unclassified information

KEY OUTCOMES

Key Outcomes from WG and Kick-Off Event:

- Information Sharing

- Community Awareness

- Networking/Collaboration Opportunities

- Initialize the Adversarial Artificial Intelligence Working Group

EVENT DETAILS

Other A2IWG information can be found in documents posted on this event website: https://a2iwg.ida.org/

Microsoft Teams and phone line information will be sent to registrants before the event.

Kick-Off Breakout Session Topics

Below are the proposed breakout session topics. These topics will be further refined and scoped during our pre-kick-off event series. The definitions below are starting points only; they are flexible and subject to change.

Topic 1: AI Trusted Robustness

Robustness Metrics/Certifications

AI Trustworthiness

Privacy and Confidentiality

Test and Evaluation

How should these results be certified?

Is there a one-size-fits-all approach to metrics, or will there be many approaches? If there are many approaches, how will the appropriate one be selected?

Subgroup discovery: a data mining technique that supports finer-grained analysis of models and their features, rather than relying on high-level metrics such as accuracy

What specific questions are test and evaluation teams going to need answered?

How does this relate to explainable AI? Improved understanding of how features and labels map to each other will make it easier to predict what type of A2I/AML risks may be present.

How does this relate to ethical AI? There may be an opportunity to leverage the same metrics.

Probability of attack success? (Pseudo-non-deterministic models vs. static deterministic models)

Results should guide stakeholders toward effective decisions without steering them away from good products when the risk is acceptable.

How should this be factored into the Authority to Operate (ATO) process? Should existing products be reassessed once this assessment is added?

Impact on decision support

Assessing the software supply chain: software composition analysis (GitHub repositories, Docker/Kubernetes containers), including tampering with math or algorithms such as optimizers

Which A2I/AML methods should be used to assess a model? Should there be specific methods to assess the effectiveness of various defenses?

Assessing the data supply chain: source of data, trustworthiness of the source, data provenance, and chain of custody throughout the entire machine learning workflow/life cycle

Defense through obscurity is not viable
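The subgroup discovery item above contrasts fine-grained analysis with a single high-level accuracy figure. The sketch below illustrates the point with a simplified, slice-based evaluation: it scores predefined subgroups rather than searching for them as a full subgroup discovery algorithm would. The record format, the "day"/"night" subgroup key, and the `margin` threshold are hypothetical choices for illustration.

```python
from collections import defaultdict


def subgroup_accuracy(records):
    """Return overall accuracy and a dict of per-subgroup accuracy.

    `records` is an iterable of (subgroup, y_true, y_pred) tuples. The subgroup
    key (e.g. "day"/"night") is a hypothetical slicing attribute.
    """
    correct = total = 0
    counts = defaultdict(lambda: [0, 0])  # subgroup -> [correct, total]
    for group, y_true, y_pred in records:
        hit = int(y_true == y_pred)
        correct += hit
        total += 1
        counts[group][0] += hit
        counts[group][1] += 1
    per_group = {g: c / n for g, (c, n) in counts.items()}
    return correct / total, per_group


def weak_subgroups(per_group, overall, margin=0.1):
    """List subgroups whose accuracy is more than `margin` below overall accuracy."""
    return sorted(g for g, acc in per_group.items() if acc < overall - margin)


# Illustrative data: perfect on "day" inputs, weak on "night" inputs.
records = [("day", 1, 1)] * 90 + [("night", 1, 0)] * 6 + [("night", 1, 1)] * 4
overall, per_group = subgroup_accuracy(records)
print(overall, per_group, weak_subgroups(per_group, overall))
```

With this made-up data the model reports 94% overall accuracy, while the per-subgroup view shows 100% on "day" inputs and only 40% on "night" inputs, which is exactly the kind of weakness a single aggregate metric hides.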

Topic 2: AI System Security

Algorithmic Robustness

Adversarial Training

Label Smoothing Defense

Feature Denoising

Ensemble Classifiers Using Different Features
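Label smoothing, listed above as a defense, replaces one-hot training targets with a softened distribution. A minimal sketch in plain Python, assuming K classes and a smoothing factor `epsilon` (the default of 0.1 is an illustrative value, not a recommendation). It shows only the target transformation and its effect on the loss, not a training loop, and makes no claim about how much adversarial robustness the technique actually provides.

```python
import math


def smooth_labels(label, num_classes, epsilon=0.1):
    """Return a label-smoothed target vector for class `label`.

    Every class receives epsilon / K and the true class additionally receives
    1 - epsilon, so the vector still sums to 1.
    """
    if not 0.0 <= epsilon < 1.0:
        raise ValueError("epsilon must be in [0, 1)")
    target = [epsilon / num_classes] * num_classes
    target[label] += 1.0 - epsilon
    return target


def cross_entropy(target, probs):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)


# An overconfident prediction is cheap under a one-hot target but costly under
# a smoothed one, which discourages extreme output probabilities in training.
probs = [0.001, 0.001, 0.997, 0.001]
print(cross_entropy(smooth_labels(2, 4, epsilon=0.0), probs))
print(cross_entropy(smooth_labels(2, 4, epsilon=0.1), probs))
```

Setting `epsilon=0.0` recovers the ordinary one-hot target, which makes the comparison with the smoothed loss direct.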

NISTIR 8269 (Draft): A Taxonomy and Terminology of Adversarial Machine Learning

https://csrc.nist.gov/publications/detail/nistir/8269/draft

Secure System Engineering

Monitor and Track Requests

Monitor Streaming Data

Obfuscate Predictions

Knowledge framework for modeling threats

Knowledge framework for modeling potential impact and risk to systems

Assessing the data supply chain: source of data, trustworthiness of the source, data provenance, and chain of custody throughout the entire machine learning workflow/life cycle

An example from industry: Microsoft Security Engineering documentation, Threat Modeling AI/ML Systems and Dependencies

https://docs.microsoft.com/en-us/security/engineering/threat-modeling-aiml
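Two of the Secure System Engineering items in Topic 2, monitoring and tracking requests and obfuscating predictions, can be combined in a thin wrapper around a deployed model. This is a sketch under stated assumptions: `GuardedModel`, the per-client query budget, and the rounding precision are hypothetical, and the wrapped `predict_proba` callable stands in for whatever inference interface a real system exposes.

```python
from collections import Counter


class GuardedModel:
    """Hypothetical wrapper that tracks client queries and coarsens model output."""

    def __init__(self, predict_proba, labels, max_queries=1000, decimals=1):
        self.predict_proba = predict_proba  # callable: input -> list of class probabilities
        self.labels = labels
        self.max_queries = max_queries      # illustrative per-client budget
        self.decimals = decimals            # precision of the released score
        self.query_counts = Counter()       # client_id -> requests seen so far

    def query(self, client_id, x):
        # Monitor and track requests: count every call and refuse clients
        # that exceed their budget (a crude signal of model-extraction probing).
        self.query_counts[client_id] += 1
        if self.query_counts[client_id] > self.max_queries:
            raise PermissionError(f"query budget exceeded for {client_id!r}")

        probs = self.predict_proba(x)
        best = max(range(len(probs)), key=probs.__getitem__)
        # Obfuscate predictions: release only the top label and a rounded
        # score instead of the full probability vector.
        return self.labels[best], round(probs[best], self.decimals)


model = GuardedModel(lambda x: [0.12, 0.83, 0.05], ["cat", "dog", "bird"], max_queries=2)
print(model.query("client-a", x=None))
```

Returning a coarse top-1 result reduces the information an attacker can extract per query, and the query counter gives a place to hook alerting or throttling; neither is a complete defense on its own.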

Topic 3: Adversarial Artificial Intelligence Foundational Gaps

AI model characteristics exploited by adversarial attacks

Model differentiability

Feature Selection

Richness of model output

Transfer learning

Adversarial Robustness and explainable AI

Are adversarial examples bugs or features?

Gap between human and machine perception

Adversarial AI for supervised approaches (Mullin)

Feature Collision

Convex Polytope

Clean Label Backdoor

Hidden Trigger Backdoor

Adversarial AI for anomaly detection approaches

Self-supervised learning (e.g., NLP)

Unsupervised learning (e.g., Clustering)

Evasion

Data poisoning

Adversarial AI for Reinforcement Learning

Dependent on simulated environments

Influence action path of RL system

Adversarial policies

Model Decay vs. Model Attack

Online Learning

A/B Testing

Real-world attacks: model or hardware

Electromagnetic

Visual spoofing

Anomaly normalization

Risk Evaluation & Mitigation of model failure

Ensemble

Hidden models

Orthogonal model pairing
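"Model differentiability" appears in Topic 3 as a characteristic exploited by adversarial attacks. The Fast Gradient Sign Method (FGSM) is a standard example: if the model's loss is differentiable with respect to its input, an attacker can step each feature in the direction that increases the loss. The sketch below applies FGSM to a two-feature logistic-regression model in plain Python; the weights, input, and `epsilon` are illustrative values, not drawn from any real system.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, w, b):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_logistic(x, y, w, b, epsilon):
    """Fast Gradient Sign Method against logistic regression.

    With binary cross-entropy loss and p = sigmoid(w.x + b), the gradient of the
    loss with respect to the input is (p - y) * w. FGSM moves each feature by
    epsilon in the direction of that gradient's sign.
    """
    p = predict(x, w, b)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]


# Illustrative weights and input (not from any real system).
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1
x_adv = fgsm_logistic(x, y, w, b, epsilon=0.4)
print(predict(x, w, b), predict(x_adv, w, b))
```

With these values the clean input scores about 0.69 for class 1, while the perturbed input, shifted by at most 0.4 per feature, scores about 0.40 and flips to class 0. The attack needs nothing beyond the closed-form input gradient, which is why differentiability is treated as an exploitable model characteristic.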